Field-first Agentic AI for Real-world Vision

Qtana.ai is a field-first Agentic AI company addressing a core problem: on-site video data fails to translate into real AI improvement.

We enable Vision AI systems to continuously learn from the field: securely, selectively, and autonomously.

Problem

- Lack of Field Data Utilization: Most on-site video data is never used to improve AI models, despite containing critical real-world signals.

- Security & On-premise Constraints: Strict security and on-premise environments severely limit how data can be collected, transferred, and reused.

- One-shot AI Architecture: Conventional Vision AI is brittle. It is trained once, deployed once, and vulnerable to environmental change.

Vision AI should evolve with the field, not stay frozen after deployment.

Product

Qtana.ai provides an end-to-end Agentic DataOps platform that transforms on-site video data and model inference results into high-quality training data for continuous Vision AI improvement.

How It Works

- Field Data & Inference Analysis: We analyze on-site video data and inference results from already deployed AI models to diagnose real-world environments and model behavior in the field.

- Performance-driven Data Collection: Based on the analysis, we identify what data is missing and collect only the data required to improve model performance.

- Data Analysis & Recommendation: Collected data is analyzed to determine which data will have the highest impact on model accuracy and robustness.

- Data Refinement & Production: We deliver AI-ready, high-quality datasets through data labeling and quality filtering, ready for training and validation.

From field signals to model-ready data.

Target Domains

Qtana.ai is designed for industries where on-site Vision AI is critical:

- Manufacturing

- Public Surveillance

- Construction

- Agriculture

Technology

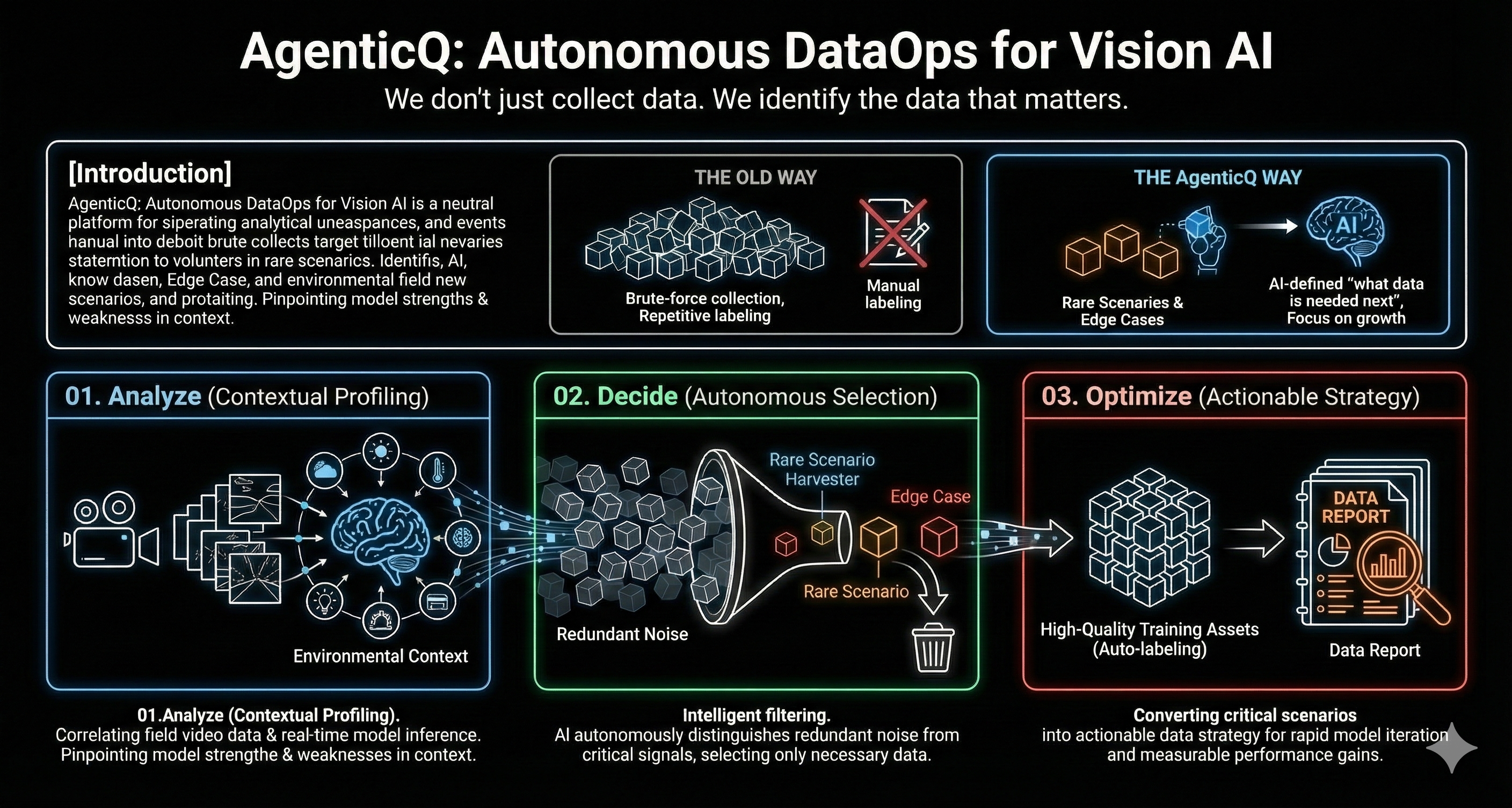

We don't just collect data. We identify the data that matters.

Introduction

AgenticQ is an Agentic AI platform that fundamentally changes how vision models improve. By continuously analyzing real-world footage alongside model inference results, it autonomously identifies and selects only the high-value data essential for tangible performance gains.

- The Old Way: Relying on brute-force collection and repetitive labeling of massive, generic datasets.

- The AgenticQ Way: The AI actively defines “what data is needed next,” focusing resources solely on rare scenarios and edge cases that drive growth.

AgenticQ Architecture

How It Works

- Analyze (Contextual Profiling): We analyze the context, not just the image. By correlating field video data with real-time model inference outputs, AgenticQ pinpoints where the model excels and where it fails under specific environmental conditions.

- Decide (Autonomous Selection): Our Rare Scenario Harvester acts as an intelligent filter, autonomously separating redundant noise from critical signals, and selecting only the scenarios required for retraining.

- Optimize (Actionable Strategy): AgenticQ converts these critical scenarios into high-quality training assets through auto-labeling, providing an immediately actionable data strategy for rapid model iteration.
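For readers who want a concrete picture, the Analyze and Decide steps above can be sketched in a few lines of Python. This is an illustrative toy, not AgenticQ's actual implementation: the `FrameRecord` schema, the condition tags, the use of mean confidence as a weakness signal, and the `weak_threshold` value are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class FrameRecord:
    """One field frame plus the deployed model's output (hypothetical schema)."""
    frame_id: str
    condition: str     # assumed environment tag, e.g. "night" or "rain"
    confidence: float  # assumed top detection confidence in [0, 1]


def profile_by_condition(records):
    """Analyze: mean model confidence per environmental condition."""
    grouped = defaultdict(list)
    for r in records:
        grouped[r.condition].append(r.confidence)
    return {cond: mean(vals) for cond, vals in grouped.items()}


def select_for_retraining(records, weak_threshold=0.6):
    """Decide: keep low-confidence frames from conditions where the model is weak on average."""
    profile = profile_by_condition(records)
    weak = {cond for cond, avg in profile.items() if avg < weak_threshold}
    return [
        r for r in records
        if r.condition in weak and r.confidence < weak_threshold
    ]


# Made-up sample data, for illustration only.
records = [
    FrameRecord("f1", "day", 0.95),
    FrameRecord("f2", "day", 0.91),
    FrameRecord("f3", "night", 0.42),
    FrameRecord("f4", "night", 0.70),
    FrameRecord("f5", "rain", 0.35),
]
selected = select_for_retraining(records)
print([r.frame_id for r in selected])  # ['f3', 'f5']
```

In this toy data the model is confident in daytime frames, so none of them are selected. Only the low-confidence night and rain frames are passed on, which mirrors the idea of collecting only the data that matters. The Optimize step (auto-labeling the selected frames) is left out of the sketch.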

Contact

Send us a message and we’ll get back to you.

Location

Qtana, 133-ho, 1F, 54 Changeop-ro, Sujeong-gu, Seongnam-si, Gyeonggi-do, Republic of Korea